A One-Act Festival in Your Browser (WebXR)

After successfully producing live theater in virtual reality at HERE Fest last October and showcasing our process in a documentary film, we asked the dangerous question: should we do it again? Looking at the trends, March 2021 looked like a history-making month for spatial computing and virtual performance, as the Royal Shakespeare Company launched DREAM, The Under Presents remounted TEMPEST, and SXSW went virtual.

#OnBoardXR

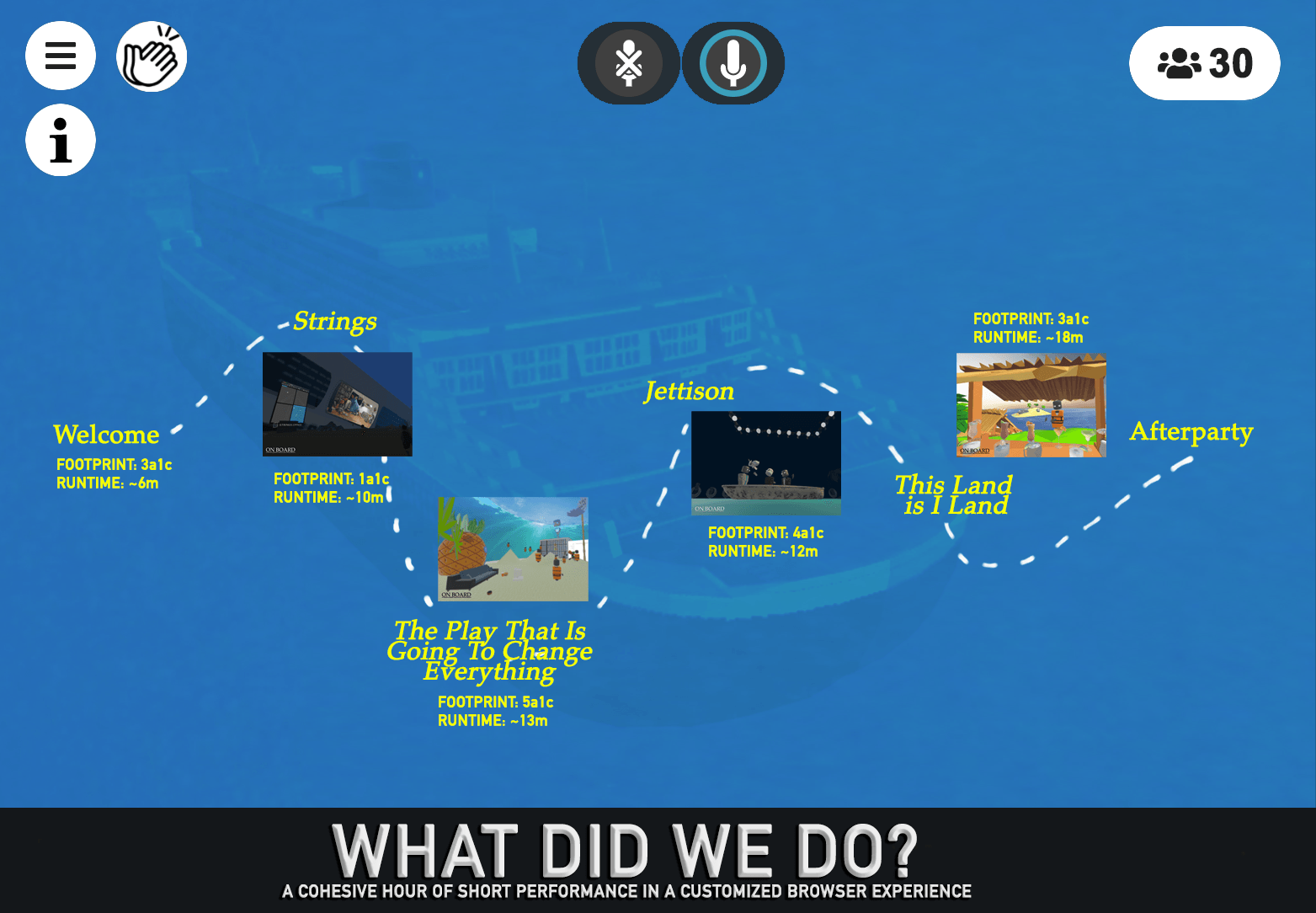

So, for the one-year anniversary of the global shutdown of the entertainment industry, I partnered with ActiveReplica.io and Agile Lens to spin up a custom iteration of the Mozilla Hubs ecosystem, which we called XRTHEATER.LIVE. There we presented #OnBoardXR, a one-act festival (runtime ~60 minutes) produced, rehearsed, and performed entirely in web-based virtual reality. It demonstrated several different modes and styles of fully immersive virtual performance, supported by a customized toolkit and user interface.

I reached out to other creators at the bleeding edge of interactive live performance about remounting their existing WebXR projects. Using a shared Google document and Mozilla Spoke, we spun up four unique shows in a couple of weeks, each with a custom “stage” tailored to its creators’ needs and artistic vision. Working this way also let us jump into each other’s projects to stress test, optimize, and offer feedback.

Mozilla Hubs has a built-in “switch scene” feature that, serendipitously, “fades to black” while changing the room from one template to the next, just like a scene change on a large-scale show. This let us take the audience on a “dark ride” through all the pieces without ever leaving their web browser.

Once we had finalized everyone’s “Scene” on the free version of Mozilla Hubs, we did a “paper tech” to step through every cue and asset needed for the show. This gave us a master list for stage management and the run of show. Using artificial intelligence, I generated music cues to play through the blackouts during transitions, keeping the audience immersed in the experience. With our master list in hand, Jacob Ervin and his team tirelessly helped us migrate everyone’s show to the XRTHEATER.LIVE server and clean up the rough edges of the first VR scene that (most of) our artists had ever designed.

Within two weeks, a team whose members had never met each other had remounted four live experiences into a cohesive evening of theater and sold out its run, demonstrating a path for any live performer to create and monetize work in browser-based virtual reality today.

ACT ONE

The evening began outside my free #FutureStages template. During last year’s run of Jettison, we learned that users tend to carry the etiquette of familiar physical spaces into virtual worlds. Audiences expressed more confidence and comfort learning new controls within the familiar ritual of entering the theater, checking in at the box office, and finding their seat. The ritual also discouraged side-questing, since users intuitively understood their “role” in the theatergoing experience.

As audiences crossed the Theater Lobby to enter the auditorium, they stepped onto the deck of a luxury cruise ship. This is similar to entering a black box theater that integrates the entire venue into the scenic design, but without the traditional limitations of physical architecture. I simply bisected my existing 3D model of the theater and merged it with a 3D model of a cruise ship.

To reduce server load and help track our attendees, Active Replica created adorable default avatars that put every audience member in a matching life vest. This let our performers, in their own custom avatars, stand out from the crowd.

While on deck, our guests could mingle as Dasha Kittredge, Ari Tarr, and I roamed in character as Pirates and Yacht Bros to literally “on board” the audience with best practices and technical troubleshooting. During our welcome speech, we announced a “practice transition” so everyone could experience a scene switch. It transitioned everyone to the exact same cruise ship, but with the skybox replaced by a 360° storm, plunging us into darkness and the beginning of our journey…

ACT TWO

As the lights came up, the audience found themselves “under the water,” with broken bits of the (now sunken) cruise ship littering the ocean floor. Our Actor-Guides asked the audience to help look for survivors, leading us to the wreckage, where a dancer performed live through a porthole window.

I found Clemence Debaig’s work with Unwired Dance on Twitter and wrote her a direct message. She responded instantly and within a few hours, we had spun up a rehearsal room.

In her piece STRINGS, the audience uses a web app on their phones to trigger haptic bands on her limbs, influencing her movement in real time as she performs. We collaborated to place a screen share of this web app next to her live video “under water,” so the audience could choose whether to participate while still observing the process.

STRINGS was the perfect opening piece: it comforted new users with a simple webcam performance while disrupting the expectations of seasoned VR users who had never seen haptic technology incorporated into live dance. Through audience interactions, Clemence would ultimately break the glass and “swim” toward the camera to cover the lens as the music faded, taking us to another blackout…

ACT THREE

Still under water, the audience was transported to the hyper-saturated universe of SpongeBob’s Bikini Bottom. A billboard displayed the GPT-3 interface and the text prompt that had generated the entire script for our next play.

Isak Keller had emailed me to participate in his own upcoming festival of new plays written by artificial intelligence. I surprised him by asking if he had one that might be staged “under water.” Serendipitously, he had prompted GPT-3 to write a very bizarre episode of SpongeBob SquarePants, and we quickly found Creative Commons 3D models resembling the environments and characters of the famous cartoon. The actors had never performed in VR and didn’t have headsets, so they rehearsed and performed by puppeting their characters with keyboard and mouse, relying heavily on vocal performance.

This was, by far, the “heaviest” part of our pipeline: a large cast with minimal technical background performing a text that openly acknowledged it was not “crafted” by theater professionals but generated by a text-based artificial intelligence. Crucially, though, the piece celebrated and showcased the creative culture we wanted to capture, one that empowers any artist to adopt these tools and technologies.

ACT FOUR

The next transition brought the audience above the water, into a stripped-down version of our original Jettison theater. Rather than switching between “modes,” this time our cast performed entirely as 3D avatars in headsets, offering a true remount of the most successful elements of our previous production. As an homage to our original work, we chose to stick with the default avatars and 3D objects as a proof of concept for what any theater-maker could build without bespoke modeling or coding.

In the context of the evening, Jettison represented the most “traditional” remount of a formal work of live theater, performed entirely in virtual reality by actors in three different cities. Since its publication in One Acts of Note, the play has had an eclectic history, with stagings in a site-specific venue (a swimming pool), a proscenium house (Miami’s Arsht Center), and now, twice, in VR. Each production has been unique, but this staging was special because Nican Robinson, Nick Carrillo, Ashley Clements, and I got to approach and ground the work in what has become a safe and familiar rehearsal room. This led to much more discovery and play, and to my own first moments of truly “losing myself” in VR.

In the middle of our run, Jettison was also nominated for the Producers Guild’s 2020 Innovation Award, an extremely special milestone for this incredible team.

As a personal anecdote, Nican’s physical mastery delivered a comedic beat so flawlessly during one run that I actually “broke,” something I rarely do on stage or screen. Later in the run, his audio failed, but he knew our movements so well that he mimed the entire show while our director, David Gochfeld, grabbed the script and read the lines into the room! The audience didn’t know! If that ain’t theater, I don’t know what is.

Many audience members found me on Twitter this week to share their surprise and appreciation for the unexpected catharsis and emotion of “watching real theater” after a year without physical venues. It’s pretty incredible to see what’s possible with two weeks and a twelve-minute play.

ACT FIVE

Next, we introduced passengers to a stunning virtual island, developed by Active Replica for a corporate event with Dasha Kittredge (The Under Presents) and Ari Tarr (AdventureLab), who also performed the piece. The climax was an interactive, immersive pseudo-scavenger hunt across the scenic landscape to save our characters from quicksand, a giant squid, and each other. The lights dimmed as they soared into the sunset.

AFTER PARTY

As the lights came up one final time, the audience was returned to the deck of the cruise ship with a sunset skybox, allowing them to mingle and discuss the performance with each other and any lingering actors.

We dropped a link to a feedback survey and a curtain call video featuring the members of our team who chose to send clips of themselves taking a bow in front of their technical setups.

BACKSTAGE

The incredible talent on stage was exceeded only by the team behind the scenes. Kevin Laibson did all the heavy lifting: first as our Line Producer during production meetings, then as House Manager for ticket sales and audience onboarding, and finally running the show cues and transitions with Alex Coulombe. Jacob Ervin and his team at Active Replica spun up a custom Mozilla Hubs Cloud instance and helped optimize every asset and audience consideration. Meanwhile, Roman Miletitch built a custom user interface that provided three tiers of access:

Simplified Audience Login

Customized Performer Toolkit

Stage Manager GUI Cue Board

Each user was assigned a “role” tied to a key-phrase entered at login. Users without a key-phrase defaulted to the simplified audience login, stripped of every button and interaction except the preferences menu, microphone on/off, an applause emoji, and an info button that revealed a full-screen playbill.
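The tiering described above can be sketched as a lookup from key-phrase to tier, with anything unrecognized falling back to the audience view. This is a minimal illustration, not Roman’s actual implementation: the key-phrases, button identifiers, and the extra stage-manager controls are hypothetical placeholders.

```python
from enum import Enum
from typing import List, Optional


class Tier(Enum):
    AUDIENCE = "audience"
    PERFORMER = "performer"
    STAGE_MANAGER = "stage_manager"


# Hypothetical key-phrases; the production's real ones were private.
KEY_PHRASES = {
    "break-a-leg": Tier.PERFORMER,
    "places-please": Tier.STAGE_MANAGER,
}

# The only controls an audience member sees, per the description above.
AUDIENCE_BUTTONS = ["preferences", "microphone", "applause", "playbill"]


def resolve_tier(key_phrase: Optional[str]) -> Tier:
    """Map a login key-phrase to an access tier.

    A missing or unrecognized phrase falls back to the audience tier,
    so nobody accidentally receives extra controls.
    """
    if not key_phrase:
        return Tier.AUDIENCE
    return KEY_PHRASES.get(key_phrase.strip().lower(), Tier.AUDIENCE)


def visible_buttons(tier: Tier) -> List[str]:
    """Return the buttons shown for a tier; each tier builds on the one below."""
    buttons = list(AUDIENCE_BUTTONS)
    if tier in (Tier.PERFORMER, Tier.STAGE_MANAGER):
        buttons += ["waypoint", "avatar"]
    if tier is Tier.STAGE_MANAGER:
        # Assumption: "full administrative access" is modeled as two extra controls.
        buttons += ["cue_board", "admin_tools"]
    return buttons


print(visible_buttons(resolve_tier("Break-A-Leg")))
# ['preferences', 'microphone', 'applause', 'playbill', 'waypoint', 'avatar']
```

Defaulting to the most restricted tier is the safer design choice here: a typo in a key-phrase costs a performer their extra buttons, not the audience their clean interface.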

When performers logged in with their key-phrase, they saw a similarly “clean” interface with two additional buttons: Waypoint and Avatar. This let us pre-program one “costume” and one “starting position” for each performer. Because the roles and their corresponding assets lived in a JSON file, we could update them (almost) on the fly by publishing a new version. Features like this may not seem like a big deal until you’ve performed without them. As our collaboration continues, we would ideally develop a robust toolkit that turns most show needs into a hotkey.
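A roles file of the kind described above might pair each performer with one avatar and one waypoint. The sketch below assumes a hypothetical schema (a top-level `roles` object with `avatar` and `waypoint` fields, placeholder `example.com` URLs); the production’s actual file format was not published. Validating on load means a bad edit fails before showtime rather than mid-performance.

```python
import json
from dataclasses import dataclass
from typing import Dict

# Hypothetical roles file; the real schema is an assumption.
ROLES_JSON = """
{
  "roles": {
    "performer_a": {"avatar": "https://example.com/avatars/a.glb", "waypoint": "stage-left"},
    "performer_b": {"avatar": "https://example.com/avatars/b.glb", "waypoint": "stage-right"}
  }
}
"""


@dataclass(frozen=True)
class PerformerRole:
    name: str
    avatar_url: str  # the one pre-programmed "costume"
    waypoint: str    # the one pre-programmed "starting position"


def load_roles(raw: str) -> Dict[str, PerformerRole]:
    """Parse the roles file, failing loudly if an entry is missing a field."""
    data = json.loads(raw)
    roles = {}
    for name, entry in data["roles"].items():
        missing = {"avatar", "waypoint"} - set(entry)
        if missing:
            raise ValueError(f"role {name!r} is missing {sorted(missing)}")
        roles[name] = PerformerRole(name, entry["avatar"], entry["waypoint"])
    return roles


roles = load_roles(ROLES_JSON)
print(roles["performer_a"].waypoint)  # stage-left
```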

The Stage Manager was given full administrative access plus a custom “cue board” that could be programmed to summon props, animations, media clips, scene changes, and more. This system was Roman’s dream design, and he tirelessly worked on updates throughout the run of #OnBoardXR as a gift to the performance community, to make performing in virtual reality easier.
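Conceptually, a cue board like the one described above is an ordered cue list with a “standby” (peek at the next cue) and a “go” (fire it and advance). The sketch below is a hypothetical model of that idea, not the XRTHEATER.LIVE code: the cue kinds mirror the categories listed above, and `send` stands in for whatever mechanism actually broadcasts a cue into the room.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Cue categories taken from the description above.
CUE_KINDS = {"prop", "animation", "media", "scene"}


@dataclass(frozen=True)
class Cue:
    number: str  # e.g. "12" or "12A", as called by the Stage Manager
    kind: str    # one of CUE_KINDS
    target: str  # hypothetical identifier of the prop, clip, or scene

    def __post_init__(self) -> None:
        if self.kind not in CUE_KINDS:
            raise ValueError(f"unknown cue kind: {self.kind!r}")


class CueBoard:
    """Fires a pre-programmed list of cues in order, one 'GO' at a time."""

    def __init__(self, cues: List[Cue], send: Callable[[Cue], None]) -> None:
        self._cues = list(cues)
        self._send = send  # assumption: something that broadcasts the cue to the room
        self._position = 0

    def standby(self) -> Optional[Cue]:
        """Return the next cue without firing it, or None at the end of the show."""
        if self._position < len(self._cues):
            return self._cues[self._position]
        return None

    def go(self) -> Cue:
        """Fire the next cue and advance."""
        cue = self.standby()
        if cue is None:
            raise IndexError("no cues remaining")
        self._send(cue)
        self._position += 1
        return cue
```

For a quick rehearsal, `send` can simply be a list’s `append` method, which records every fired cue for review after the run.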

TICKETING

We offered pay-what-you-can tickets through Eventbrite in two types: an interactive ticket (inside the experience) or a non-interactive ticket (a link to a secondary livestream on YouTube). For each show, we emailed links to the private room at XRTHEATER.LIVE, similar to Zoom theater room links. Every show sold out, including our invited dress rehearsal, through word of mouth the week of the show and without any major marketing or promotional push.

We were pleasantly surprised to discover that 64% of our attendees chose to pay for their ticket; 27% of paying attendees paid $20 or more, and the average was just above $12. Early survey feedback shows our audience was mostly early adopters of XR technologies who self-identified as weekly patrons of live performance before the pandemic and are familiar with avant-garde, experimental theater. A majority of survey respondents valued tickets for future events like #OnBoardXR at $10 to $15.

For this initial run, our team’s footprint was quite large (18 people in total), so we limited capacity to around 25 interactive tickets to avoid overloading the server. Concurrent livestream views typically doubled our in-experience attendance (many of these viewers also chose to pay for a non-interactive ticket) and gave our team a “monitor” outside the experience. In the future, double-casting and understudies might help open up more tickets and reduce failure points.

Hubs also offers an option to enable “Lobby Ghosts,” which let users move their point of view around the space without an avatar, saving rendering cost. Many experiences also limit audience audio to improve technical performance. Early projections suggest these measures could raise our in-experience capacity closer to that of a traditional 99-seat theater.

Our retention was fantastic: almost all attendees stayed through the entire 60-minute journey, and only a handful (across the entire run) dropped off due to technical difficulties with their own client. However, several users did have to refresh during the show, especially on first-generation headsets, which seem to struggle to purge their cache after a scene switch and instead try to retain all previous media and objects.

With more streamlined production, increased capacity, and tiers of interactivity, we are beginning to see an early path to a more sustainable model for presenting work in WebXR.